Vehicle Detection and Tracking from Video Frame Sequence

Manisha Chaple, Prof. S.S. Paygude

Abstract—Video and image processing has been used for traffic surveillance, analysis and monitoring of traffic conditions in many cities and urban areas. Motion tracking is one of the most active research topics in the computer vision field. This paper aims to detect and track vehicles in a video frame sequence. The vehicle motion is detected and tracked along the frames using the optical flow algorithm and the background subtraction technique. The distance travelled by the vehicle is calculated from the movement of its centroid over the frames. Many proposed motion tracking techniques are based on template matching, blob tracking and contour tracking. Optical flow, a well-known motion tracking and estimation technique, has however not been widely used or tested for its practicability in traffic surveillance systems. To analyze its reliability and practicability, this research project implements optical flow in a traffic surveillance system and evaluates its performance. The tracking results indicate that optical flow is an effective technique for tracking moving objects and has considerable potential for use in traffic surveillance systems.

Index Terms— Vehicle detection, video sequence, computer vision, background modelling, Traffic Monitoring, Tracking, Optical flow, Motion estimation, Estimate Vehicle Speed


1 INTRODUCTION

Video surveillance systems have long been in use to monitor security-sensitive areas. Making video surveillance systems "smart" requires fast, reliable and robust algorithms for moving object detection, classification, tracking and activity analysis. Moving object detection is the basic step for further analysis of video. It handles segmentation of moving objects from stationary background objects. This not only creates a focus of attention for higher-level processing but also decreases computation time considerably. Commonly used techniques for object detection are background subtraction, statistical models, temporal differencing and optical flow. Due to dynamic environmental conditions such as illumination changes, shadows and tree branches waving in the wind, object segmentation is a difficult and significant problem that needs to be handled well for a robust visual surveillance system.

The next step in the video analysis is tracking, which can be simply defined as the creation of temporal correspondence among detected objects from frame to frame. This procedure provides temporal identification of the segmented regions and generates cohesive information about the objects in the monitored area, such as trajectory, speed and direction. The output produced by the tracking step is generally used to support and enhance motion segmentation, object classification and higher-level activity analysis.

The final step of a smart video surveillance system is to recognize the behaviours of objects and create high-level semantic descriptions of their actions.

The outputs of these algorithms can be used both to provide the human operator with high-level data that help him or her make decisions more accurately and in a shorter time, and for online indexing and effective searching of stored video data. Advances in the development of these algorithms would lead to breakthroughs in applications that use visual surveillance.

Prof. S.S. Paygude is currently pursuing a PhD in Computer Engineering at Pune University, India. E-mail: shilpa.paygude@mitpune.edu.in

2. MOTIVATION

The objective of video tracking is to map target objects across consecutive video frames. The mapping can be especially difficult when the objects move fast relative to the frame rate. Another circumstance that increases the difficulty of the problem is when the tracked object changes orientation over time.

3. APPLICATIONS

1. Automated video surveillance: Designed to monitor the movement in an area in order to identify moving objects.

2. Robot vision: Moving obstacles in the robot's path are identified by a real-time object tracking system to avoid collisions.

3. Traffic monitoring: Traffic is monitored using cameras, so that vehicles breaking traffic rules can be tracked down easily.

4. Animation: Object tracking algorithms can be extended to animation.

IJSER © 2013 http://www.ijser.org

International Journal of Scientific & Engineering Research Volume 4, Issue3, March-2013 2

ISSN 2229-5518

4. LITERATURE SURVEY

A. Gyaourova, C. Kamath and S.-C. Cheung have studied the block matching technique for object tracking in traffic scenes. A motionless airborne camera is used for video capturing. They discuss the block matching technique for different resolutions and complexities [5].

Yoav Rosenberg and Michael Werman explain an object-tracking algorithm using moving cameras. The algorithm is based on domain knowledge and motion modeling. The displacement of each point is assigned a discrete probability distribution matrix. Based on the model, an image registration step is carried out. The registered image is then compared with the background to track the moving object [6].

A. Turolla, L. Marchesotti and C.S. Regazzoni discuss a camera model consisting of multiple cameras. They use object features gathered from two or more cameras situated at different locations. These features are then combined for location estimation in video surveillance systems [7].

One simple feature-based object tracking method is explained by Yiwei Wang, John Doherty and Robert Van Dyck. The method first segments the image into foreground and background, and features are gathered for each object of interest. Then, for each consecutive frame, the changes in features are calculated for various possible directions of movement. The one that satisfies certain threshold conditions is selected as the position of the object in the next frame [8].

Çiğdem Eroğlu Erdem and Bulent San have discussed a feedback-based method for object tracking in the presence of occlusion. In this method, several performance evaluation measures for tracking are placed in a feedback loop to track non-rigid contours in a video sequence [9].

Alok K. Watve and Shamik Sural survey the tracking of objects in motion and under occlusion, and compare the results of various algorithms, such as Kalman filtering for foreground extraction and camera modeling for background subtraction with multiple cameras, for both fixed and moving objects. In block matching, the features of the tracked object are extracted by calculating the frame difference; in the approach that exploits domain knowledge, the authors calculate the motion parameters from the scale parameters and the displacement vector. In the compressed domain, the object is tracked across frames using its bounding rectangle and histograms [10].

P. Subashini, M. Krishnaveni and Vijay Singh discuss object tracking with frame rate display and color conversion, using background subtraction with different techniques such as estimating the median over time, computing the median over time and estimating moving objects. Segmentation algorithms such as the Sobel, Canny and Roberts operators are compared; the object is segmented through edge detection and its derivatives are calculated. Through region filtering in color segmentation, color samples for skin are processed and their mean and covariance over the color channels are computed. The motion vectors of the moving object are calculated with the optical flow algorithm, and blob analysis is applied to the binary features of the image. Tracking is measured by the position obtained through region filtering, and the information about the object is used to create an estimate of the new object [11].

Background subtraction and motion estimation are also used for tracking an object. DCT-domain background subtraction in the Y plane is used to locate candidate objects in subsequent I-frames after a user has marked an object of interest in the given frame. DCT-domain histogram matching using the Cb and Cr planes, together with motion vectors, is used to select the target object from the set of candidate objects [12].

Another tracking and detection algorithm predicts the object boundary using block motion vectors and then updates the contour using occlusion/disclosure detection. A further group of algorithms deals with object tracking using the adaptive particle filter [13, 14] and the Kalman filter [15-17].

Efficiency is improved by reducing the number of computations for object tracking in video frames: the object is tracked with the adaptive particle filter and the Kalman filter, and color frames are transformed to the YCbCr space, where wavelet transform coefficients are used for detecting moving objects [18].

A weighted adaptive scalable hierarchical (WASH) tree based video coding algorithm with low memory usage is proposed. The standard coding uses three separate lists to store and organize tree data structures and their significance, which can grow large at high rates and consume large amounts of memory. In the proposed algorithm, a value-added adaptive scale-down operator discards unnecessary lists, and the length of the sorting phase is shortened to reduce coding time and memory usage. Spatial and temporal scalability can be easily incorporated into the system to meet various types of display parameter requirements, and self-adapting rate allocations are achieved automatically. Results show that the proposed method reduces memory usage and run time and improves PSNR [19].

A novel approach for car detection and classification is presented that takes the video of a vehicle as input and detects and classifies the vehicle based on its make and model. It takes into consideration four prominent features, namely the logo of the vehicle, its number plate, color and shape. Logo detector and recognizer algorithms are implemented to find the manufacturer of the vehicle. The detection process is based on the AdaBoost algorithm, which uses a cascade of binary features to rapidly locate and detect logos. The number plate region is localized and extracted using the blob extraction method. The color of the vehicle is then retrieved by applying a Haar cascade classifier to first localize the vehicle region and then applying a novel algorithm to find the color. The shape of the vehicle is also extracted using the blob extraction method. The classification is done with support vector machines. Experimental results show that the system is a viable approach and achieves good feature extraction and classification rates across a range of videos with vehicles under different conditions. Edge matching, divide-and-conquer search, gradient matching, histograms of receptive field responses, pose clustering, SIFT and SURF are some of the approaches applied. All these methods are either appearance-based or feature-based methods. They fall short in one way or another when it comes to real-time applications. There is therefore a need for a new system that combines the positive aspects of both kinds of method and increases the efficiency of object tracking in real-life scenarios [20].

Daniel Marcus Jang proposed the Car-Rec search framework, which is made up of four stages: feature extraction, word quantization, image database search, and structural matching [21]. Car-Rec differs from other car identification systems in that it focuses on efficiently searching a large database of car imagery, using quantized feature descriptors to find a short list of likely matches. This list is then re-ranked with a structural verification algorithm [20].

The merit of this method is that SIFT may be used in place of SURF feature extraction, and the Lucerne search and the structural verification algorithm may be swapped out for alternative choices. Its modular framework can be applied to general image recognition tasks, particularly similar-image search in large image databases. The demerit of this method lies in handling imagery with background noise.


5 BACKGROUND SUBTRACTION ALGORITHM

5.1 Pre Processing Phase

Before any video processing operation is performed, the quality of the frames is essential. This phase is concerned with improving frame quality, and the video is tested with three types of noise: salt-and-pepper noise, Gaussian noise and periodic noise. These noises are the most likely to degrade the quality of video frames. Each noise type is removed using various filtering techniques, and the best-suited filter is chosen for each noise.

1. Create a frame sequence from a video input.

2. Take the current image and the previous image.

3. Take the difference between them.

4. Select a threshold and apply thresholding.

5. Perform the filtering operation to remove the noise from the image.
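The filtering in step 5 can be illustrated with a median filter, the filter this paper applies to the thresholded image in its experiments. The following is a minimal sketch in plain Python rather than MATLAB; the function name `median_filter`, the 3×3 window and the border handling (edge replication) are assumptions made for illustration, not details given in the paper.

```python
from statistics import median

def median_filter(image, size=3):
    """Return a filtered copy of a 2-D grey-level image (a list of rows)."""
    rows, cols = len(image), len(image[0])
    half = size // 2
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Collect the size x size neighbourhood; coordinates outside the
            # image are clamped to the nearest border pixel (an assumption).
            window = [
                image[min(max(r + dr, 0), rows - 1)][min(max(c + dc, 0), cols - 1)]
                for dr in range(-half, half + 1)
                for dc in range(-half, half + 1)
            ]
            out[r][c] = median(window)
    return out

# A flat grey patch corrupted by one "salt" (255) and one "pepper" (0) pixel.
noisy = [
    [10, 10, 10, 10],
    [10, 255, 10, 10],
    [10, 10, 0, 10],
    [10, 10, 10, 10],
]
clean = median_filter(noisy)
```

Because isolated spikes never reach the middle of the sorted window, both corrupted pixels in this small patch are replaced by the surrounding grey level, while a linear averaging filter would smear them into their neighbours.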

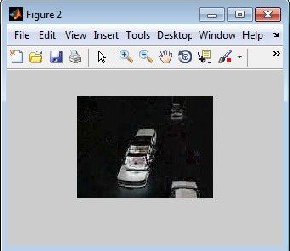

Figure 2: Background subtraction on the generated frame

5.2 Segmentation Phase

Once the vehicle has been detected using the above steps, the centroid of the detected vehicle is calculated and its bounding box is drawn; for this we use the regionprops function of MATLAB. The other steps to track the vehicle are as follows:

To separate the foreground object from the background, the segmentation operation is performed with a background modeling technique, frame differencing, which requires little processing time. The steps are:

1. Read the first input frame and consider it as the background frame.

2. Convert the background frame to grey scale.

3. Set the threshold value.

4. Set the variables for the frame size, such as the width and height of the background frame.

5. Perform the following processing from the second frame to the last frame in the video.

6. Read the frame.

7. Convert the frame to grey scale.

8. Find the frame difference between the current frame and the previous frame.

9. Diff_frame = frame t – frame t-1

10. Classify each pixel as belonging to the background or the foreground:

If the value of Diff_frame > Threshold value then

Pixel belongs to foreground

Store it in a new foreground vector array

Else

Set the corresponding foreground vector value to zero

End If

Now make the current frame the previous frame and the next frame the current frame.

11. Loop until the last frame of the video.
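The steps above can be sketched as follows. This is an illustrative plain-Python version, not the authors' MATLAB code: the names `to_grey` and `frame_difference_masks` are hypothetical, the grey-scale weights are the common ITU-R BT.601 luma weights, and two choices are assumptions rather than statements from the paper: the absolute value of the difference is thresholded, and the foreground array stores 1 instead of the pixel value.

```python
def to_grey(frame):
    """Convert a frame given as rows of (r, g, b) tuples to grey scale."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in frame]

def frame_difference_masks(frames, threshold):
    """Return one binary foreground mask per frame, starting at the second frame."""
    previous = to_grey(frames[0])              # steps 1-2: first frame, grey scale
    masks = []
    for frame in frames[1:]:                   # step 5: second frame onwards
        current = to_grey(frame)               # steps 6-7
        mask = [
            # steps 8-10: difference, then threshold (absolute value assumed)
            [1 if abs(cur - prev) > threshold else 0
             for cur, prev in zip(cur_row, prev_row)]
            for cur_row, prev_row in zip(current, previous)
        ]
        masks.append(mask)
        previous = current                     # current frame becomes previous frame
    return masks

# Three tiny 2x3 frames in which one bright pixel moves one column to the right.
dark, bright = (0, 0, 0), (200, 200, 200)
frames = [
    [[dark, dark, dark], [dark, dark, dark]],
    [[dark, bright, dark], [dark, dark, dark]],
    [[dark, dark, bright], [dark, dark, dark]],
]
masks = frame_difference_masks(frames, threshold=30)
```

In the second mask both the pixel the object has just left and the pixel it has just entered are marked as foreground. This is a known property of differencing consecutive frames and one reason a filtering step usually follows.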

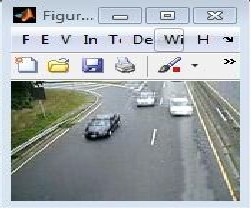

Figure 1: A frame generated from the video

5.3 Feature Extraction

The method of finding image displacements which is easiest to understand is the feature-based approach. This finds features (for example, image edges, corners, and other structures well localized in two dimensions) and tracks these as they move from frame to frame. The act of feature extraction, if done well, will both reduce the amount of information to be processed (and so reduce the workload), and also go some way towards obtaining a higher level of understanding of the scene.

1. Find the area, centroid and bounding box parameters of the object using the regionprops function of MATLAB.

2. Locate the bounding box and centroid on the object.
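To make the three measurements concrete, the sketch below computes them directly from a binary foreground mask in plain Python. It is not MATLAB's regionprops: the function name `region_properties` is hypothetical, the whole mask is treated as a single region (no connected-component labelling), and coordinates are zero-based pixel indices, all of which are simplifying assumptions.

```python
def region_properties(mask):
    """Return area, centroid (x, y) and bounding box (x, y, width, height)
    of the non-zero pixels of a binary mask, or None if the mask is empty."""
    coords = [(x, y) for y, row in enumerate(mask) for x, value in enumerate(row) if value]
    if not coords:
        return None
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    area = len(coords)                                   # number of foreground pixels
    centroid = (sum(xs) / area, sum(ys) / area)          # mean pixel position
    bounding_box = (min(xs), min(ys),
                    max(xs) - min(xs) + 1, max(ys) - min(ys) + 1)
    return {"area": area, "centroid": centroid, "bounding_box": bounding_box}

mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
props = region_properties(mask)
```

For this 3×2 blob the sketch reports an area of 6 pixels, a centroid of (2.0, 1.5) and a bounding box starting at (1, 1) with width 3 and height 2.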

6 EXPERIMENTAL RESULT OF BACKGROUND SUBTRACTION

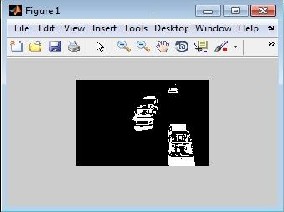

In Figure 2, background subtraction is performed on the image, and foreground subtraction is applied to the rest of the image. The frame difference is taken between the current frame and the previous frame; the resulting image is shown in Figure 3.

Figure 3: Frame differencing of current and previous frame

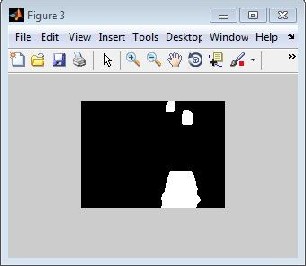

After this, the image undergoes thresholding, which is done using Otsu's method. Figure 4 shows the output image after thresholding.

Figure 4: Thresholded image output
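Otsu's method picks the grey level that maximises the between-class variance of the two pixel groups it separates. The sketch below is a minimal plain-Python illustration of that idea for integer grey levels; the function name `otsu_threshold` and the convention that pixels less than or equal to the returned level form the background class are assumptions, and library implementations may normalise or offset the result differently.

```python
def otsu_threshold(pixels, levels=256):
    """Return the grey level t that maximises the between-class variance,
    where class 0 holds pixels <= t and class 1 holds pixels > t."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = 0       # number of pixels in class 0 so far
    sum0 = 0     # sum of grey levels in class 0 so far
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue                 # class 0 still empty
        w1 = total - w0
        if w1 == 0:
            break                    # class 1 empty from here on
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        between = w0 * w1 * (mean0 - mean1) ** 2   # unnormalised between-class variance
        if between > best_var:
            best_var, best_t = between, t
    return best_t

# Difference-image values: a dark background group and a bright moving-object group.
pixels = [10, 12, 11, 13, 200, 210, 205, 190]
t = otsu_threshold(pixels)
binary = [1 if p > t else 0 for p in pixels]
```

On this bimodal sample the threshold falls at the top of the dark group, so the four bright values are labelled as foreground.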

Once thresholding is done, a filtering operation is performed to remove the noise present in the image. For this purpose a median filter is applied to the thresholded image. Figure 5 shows the filtered image.

Figure 5: Image after applying the median filter

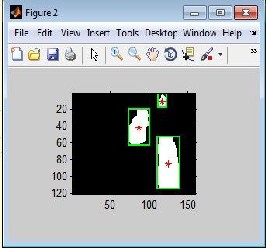

Using the regionprops function of MATLAB, the vehicle is tracked by creating a bounding box and a centroid. Figure 6 shows the detected and tracked vehicle.

Figure 6: Centroid and bounding box on the detected vehicle


The trajectory of the tracked vehicle can be seen in the figure below.
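Since the distance travelled is calculated from the movement of the centroid over the frames, the trajectory can be summarised by summing the distances between consecutive centroids. The sketch below assumes the centroids are already available as (x, y) pixel coordinates, one per frame; the function name `path_length` is hypothetical, and converting pixels to metres would need a camera calibration factor that the paper does not give.

```python
from math import hypot

def path_length(centroids):
    """Sum of Euclidean distances between consecutive centroids, in pixels."""
    return sum(
        hypot(x2 - x1, y2 - y1)
        for (x1, y1), (x2, y2) in zip(centroids, centroids[1:])
    )

# Centroid of the tracked vehicle in three consecutive frames (illustrative values).
trajectory = [(0, 0), (3, 4), (6, 8)]
distance = path_length(trajectory)   # two steps of 5 pixels each
```

Dividing each step by the time between frames would give a speed estimate in pixels per second under the same assumptions.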

7 OPTICAL FLOW ALGORITHM

7.1 Vehicle Detection Phase

Object detection is performed by extracting the features of each object. Depending on its dimensions, every object has its own specific features. The feature extraction algorithm applied in this paper is optical flow, which is used to detect and point out objects in each frame of the sequence. In this method, pixel positions are described by vectors and are compared across the frame sequence. In general, motion corresponds to a change in the vector position of pixels. Finding optic flow using edges has the advantage (over using two-dimensional features) that edge detection is better understood than two-dimensional feature detection.

7.2 Motion Estimation

Optical flow is used to compute the motion of the pixels of an image sequence. It provides a dense (point-to-point) pixel correspondence. The problem is to determine where the pixels of an image at time t are in the image at time t+1. A large number of applications use this method for detecting objects in motion.

7.3 Optical Flow Algorithm

Optical flow computation is based on two assumptions:

1. The observed brightness of any object point is constant over time.

2. Nearby points in the image plane move in a similar manner (the velocity smoothness constraint).

Suppose we have a continuous image; f(x,y,t) refers to the gray level of (x,y) at time t. Representing a dynamic image as a function of position and time permits it to be expressed as a Taylor series.

Assume each pixel moves but does not change intensity: the pixel at location (x, y) in frame 1 is the pixel at (x+Δx, y+Δy) in frame 2.

Optic flow associates a displacement vector with each pixel. The optical flow methods try to calculate the motion between two image frames, taken at times t and t + δt, at every pixel position. These methods are called differential since they are based on local Taylor series approximations of the image signal; that is, they use partial derivatives with respect to the spatial and temporal coordinates.

Assume I(x, y, t) is the center pixel in an n×n neighborhood and moves by δx, δy in time δt to I(x+δx, y+δy, t+δt). Since I(x, y, t) and I(x+δx, y+δy, t+δt) are the images of the same point (and therefore have the same intensity), we have:

$$I(x, y, t) = I(x + \delta x,\; y + \delta y,\; t + \delta t) \tag{1}$$

Expanding the right-hand side of Eq. (1) to first order and dividing by δt gives

$$I_x V_x + I_y V_y = -I_t \tag{2}$$

where $I_x$, $I_y$, $I_t$ are the intensity derivatives in x, y, t respectively and $V_x$, $V_y$ are the x and y components of the velocity, or optical flow, of I(x, y, t). Eq. (2) is then solved using the Horn-Schunck method.
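The step from Eq. (1) to Eq. (2) can be written out explicitly. The derivation below is the standard one and assumes that the displacement is small enough for a first-order Taylor expansion to hold, with higher-order terms neglected.

```latex
\begin{aligned}
I(x+\delta x,\, y+\delta y,\, t+\delta t)
  &\approx I(x, y, t) + I_x\,\delta x + I_y\,\delta y + I_t\,\delta t
  && \text{(first-order Taylor expansion)} \\
I_x\,\delta x + I_y\,\delta y + I_t\,\delta t &= 0
  && \text{(substituting into Eq. (1))} \\
I_x \frac{\delta x}{\delta t} + I_y \frac{\delta y}{\delta t} &= -I_t
  && \text{(dividing by } \delta t\text{)} \\
I_x V_x + I_y V_y &= -I_t,
  && V_x = \frac{\delta x}{\delta t},\quad V_y = \frac{\delta y}{\delta t}
\end{aligned}
```

Eq. (2) is a single equation in the two unknowns $V_x$ and $V_y$, so it cannot be solved pixel by pixel on its own; the velocity smoothness constraint supplies the additional condition that the Horn-Schunck method relies on.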

The video sequence is captured using a fixed camera. The Optical Flow block, using the Horn-Schunck algorithm (1981), estimates the direction and speed of object motion from one video frame to another and returns a matrix of velocity components. Various image processing techniques, such as thresholding and median filtering, are then applied sequentially to obtain labeled regions for statistical analysis.
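The iterative update at the core of the Horn-Schunck method can be sketched in plain Python. This is a simplified illustration, not the Optical Flow block used in the paper: the function name `horn_schunck`, the smoothness weight `alpha`, the fixed iteration count, the 4-neighbour average and the replicated borders are all assumptions chosen to keep the example short.

```python
def horn_schunck(frame1, frame2, alpha=1.0, iterations=100):
    """Estimate dense flow (u, v) between two grey-level frames (lists of rows)."""
    rows, cols = len(frame1), len(frame1[0])

    def at(img, r, c):
        # Replicate the border so that every pixel has a full neighbourhood.
        return img[min(max(r, 0), rows - 1)][min(max(c, 0), cols - 1)]

    def grid(fn):
        return [[fn(r, c) for c in range(cols)] for r in range(rows)]

    # Spatial derivatives: central differences averaged over both frames.
    ix = grid(lambda r, c: (at(frame1, r, c + 1) - at(frame1, r, c - 1)
                            + at(frame2, r, c + 1) - at(frame2, r, c - 1)) / 4.0)
    iy = grid(lambda r, c: (at(frame1, r + 1, c) - at(frame1, r - 1, c)
                            + at(frame2, r + 1, c) - at(frame2, r - 1, c)) / 4.0)
    # Temporal derivative: forward difference between the two frames.
    it = grid(lambda r, c: float(frame2[r][c] - frame1[r][c]))

    u = grid(lambda r, c: 0.0)
    v = grid(lambda r, c: 0.0)
    for _ in range(iterations):
        # Local averages of the previous estimate (smoothness constraint).
        u_avg = grid(lambda r, c: (at(u, r - 1, c) + at(u, r + 1, c)
                                   + at(u, r, c - 1) + at(u, r, c + 1)) / 4.0)
        v_avg = grid(lambda r, c: (at(v, r - 1, c) + at(v, r + 1, c)
                                   + at(v, r, c - 1) + at(v, r, c + 1)) / 4.0)

        def residual(r, c):
            # How far the averaged flow is from satisfying Eq. (2), normalised.
            return ((ix[r][c] * u_avg[r][c] + iy[r][c] * v_avg[r][c] + it[r][c])
                    / (alpha ** 2 + ix[r][c] ** 2 + iy[r][c] ** 2))

        u = grid(lambda r, c: u_avg[r][c] - ix[r][c] * residual(r, c))
        v = grid(lambda r, c: v_avg[r][c] - iy[r][c] * residual(r, c))
    return u, v

# A horizontal intensity ramp that moves one pixel to the right between frames.
frame1 = [[20 + 10 * c for c in range(8)] for _ in range(5)]
frame2 = [[10 + 10 * c for c in range(8)] for _ in range(5)]
u, v = horn_schunck(frame1, frame2)
```

Away from the image border, the horizontal component for this ramp settles close to one pixel per frame and the vertical component stays at zero. Near the left and right borders the replicated-edge derivative is halved, so the estimate there is less accurate; a production implementation would treat borders and derivative kernels more carefully.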

Thresholding is the simplest method of image segmentation. The process of thresholding returns a thresholded image that differentiates the objects in motion (in white) from the static background (in black). More precisely, it is the process of assigning a label to every pixel in an image such that pixels with the same label share certain visual characteristics. A median filter is then applied to remove salt-and-pepper noise from the thresholded image without significantly reducing its sharpness. Median filtering is a simple and very effective noise removal process and an excellent filter for eliminating intensity spikes.

7.4 Vehicle Tracking using optical flow

Object tracking refers to the process of tracing a moving object through a progression of frames. Tracking is performed by extracting features of the objects in a frame and finding the same objects in the following frames. By using the location of the object in each frame, we can determine the position and velocity of the moving object.
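A minimal sketch of this last step is given below, assuming one binary mask per frame that contains a single vehicle. The helper names and the calibration values `fps` and `metres_per_pixel` are hypothetical; a real deployment would obtain them from the camera set-up.

```python
import math

import numpy as np


def centroid(mask):
    """Return the (x, y) centroid of the non-zero pixels of a binary mask."""
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        raise ValueError("mask contains no foreground pixels")
    return float(xs.mean()), float(ys.mean())


def distance_and_speed(centroids, fps, metres_per_pixel):
    """Return (distance in metres, average speed in m/s) along a centroid path."""
    steps = zip(centroids, centroids[1:])
    pixels = sum(math.hypot(x2 - x1, y2 - y1) for (x1, y1), (x2, y2) in steps)
    distance = pixels * metres_per_pixel
    elapsed = (len(centroids) - 1) / fps  # seconds between first and last frame
    speed = distance / elapsed if elapsed > 0 else 0.0
    return distance, speed
```

For example, `distance_and_speed([centroid(m) for m in masks], fps=25, metres_per_pixel=0.05)` would give the distance travelled and the average speed over the frames in `masks`, under the assumption that the scale is constant across the image.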

8 EXPERIMENTAL RESULT OF OPTICAL FLOW

8.1 Noise Removal Technique

In this phase a 2D median filter is used to remove the noise present in the video and its frames. Tests were conducted on different filters. Among them, the Wiener filter is best suited to Gaussian noise, salt-and-pepper noise is effectively removed by the median filter, and for periodic noise a 2D FIR filter performs better than the other filters. The results obtained are shown in the figures.

Figure 7: The 2D median filter is used to remove the salt-and-pepper noise present in the image.
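To make the median filtering step concrete, here is a small NumPy sketch of a 2D median filter. The 3×3 window and the edge replication at the image border are assumptions; the window size used in the experiments is not stated.

```python
import numpy as np


def median_filter_3x3(image):
    """Replace each pixel by the median of its 3x3 neighbourhood."""
    h, w = image.shape
    padded = np.pad(image, 1, mode="edge")  # replicate border pixels
    # Stack the nine shifted copies of the image, one per window position.
    windows = np.stack(
        [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]
    )
    return np.median(windows, axis=0).astype(image.dtype)
```

Because an isolated white or black pixel is never the median of its neighbourhood, such spikes are removed while edges, where at least half the window agrees, are largely preserved.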

8.2 Segmentation Technique

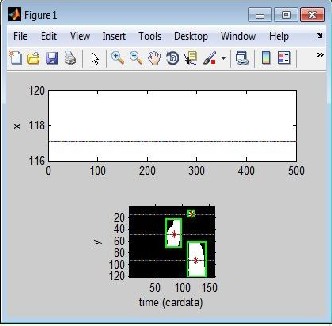

The segmentation technique groups similar objects by performing background subtraction using frame differencing. This technique is best suited to segmenting moving objects. The figures show the input image, the previous frame, and the result after applying the frame difference: the background objects are subtracted and only the foreground is displayed.

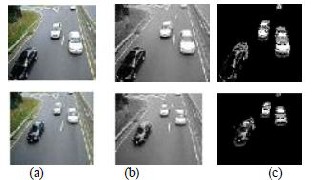

In the figure, (a) shows the original video, (b) shows the video converted to grey scale and (c) shows the segmented output obtained by frame-difference background subtraction.
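The grey-scale conversion and frame differencing described here can be sketched as below. The luminance weights are the common ITU-R BT.601 values and the threshold of 25 grey levels is an assumed placeholder; neither is taken from the experiments.

```python
import numpy as np


def to_gray(rgb):
    """Convert an (H, W, 3) RGB frame to a floating-point grey-scale image."""
    return rgb[..., :3] @ np.array([0.299, 0.587, 0.114])


def frame_difference(previous, current, threshold=25):
    """Return a binary foreground mask from two consecutive grey-scale frames."""
    # Use a signed type so that the subtraction of uint8 frames cannot wrap.
    diff = np.abs(current.astype(np.int16) - previous.astype(np.int16))
    return (diff > threshold).astype(np.uint8) * 255
```

Pixels whose intensity changes by more than the threshold between the two frames are kept as foreground (255); everything else is treated as background (0).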

8.3 Object Identification and Object Tracking

Object tracking in video is performed by applying the optical flow method to compute the motion vectors of the moving objects, then thresholding the motion of each object, and detecting and tracking the objects that exceed the threshold value as moving objects. The experimental results are shown in the figure.

Figure: Object detection and tracking using Optical flow

In the figure, (a) shows the original video, (b) shows the motion vectors computed by the optical flow method, (c) shows the objects that exceed the threshold value, detected and tracked as moving objects, and (d) shows the detected foreground of the moving object.
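One way to turn the thresholded motion mask into individual tracked objects is to label its connected regions and draw a bounding box around each. The sketch below is an illustration under assumptions: it uses 4-connectivity and a hypothetical `min_area` parameter to discard small noise regions, neither of which is specified in the experiments.

```python
from collections import deque

import numpy as np


def label_regions(mask, min_area=1):
    """Return bounding boxes (x_min, y_min, x_max, y_max) of foreground regions."""
    h, w = mask.shape
    seen = np.zeros((h, w), dtype=bool)
    boxes = []
    for y0, x0 in zip(*np.nonzero(mask)):
        if seen[y0, x0]:
            continue
        # Breadth-first flood fill over 4-connected foreground pixels.
        seen[y0, x0] = True
        queue = deque([(y0, x0)])
        ys, xs = [], []
        while queue:
            y, x = queue.popleft()
            ys.append(y)
            xs.append(x)
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                    seen[ny, nx] = True
                    queue.append((ny, nx))
        if len(ys) >= min_area:
            boxes.append((int(min(xs)), int(min(ys)), int(max(xs)), int(max(ys))))
    return boxes
```

Each returned box corresponds to one candidate moving object; raising `min_area` suppresses isolated pixels that survive the noise filter.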

9 CONCLUSION

Moving object tracking is evaluated for various surveillance and vision analysis applications. The first task of this paper is to improve the quality of the input video by applying noise removal. The second phase segments the objects using background subtraction by frame differencing, which gives a clearer grouping of objects. In the next phase, vehicle detection and tracking is done using the optical flow algorithm, which gives better results than the background subtraction technique. Finally, the centroid of the moving object is identified. These algorithms can also be extended to real-time applications and object classification.

This work can be extended by using the identified centroid of the moving object to calculate the distance travelled and the velocity, which gives the speed of the car in the video sequence.

REFERENCES

[1] Peter Mountney, Danail Stoyanov and Guang-Zhong Yang (2010). "Three- Dimensional Tissue Deformation Recovery and Tracking: Introducing techniques based on laparoscopic or endoscopic images." IEEE Signal Processing Magazine.

2010 July. Volume: 27". IEEE Signal Processing Magazine 27 (4): 14–24.

DOI:10.1109/MSP.2010.936728.

[2] Lyudmila Mihaylova, Paul Brasnett, Nishan Canagarajan and David Bull (2007). Object Tracking by Particle Filtering Techniques in Video Sequences; In: Advances and Challenges in Multisensor Data and Information. NATO Security Through Science Series, 8. Netherlands: IOS Press. pp. 260–268. ISBN

978-1-58603-727-7.

[3] Kato, Hirokazu, and Mark Billinghurst (1999). "Marker Tracking and HMD Calibration for a Video-based Augmented Reality Conferencing System".

IJSER © 2013 http://www.ijser.org

International Journal of Scientific & Engineering Research Volume 4, Issue3, March-2013 7

ISSN 2229-5518

IWAR '99 Proceedings of the 2nd IEEE and ACM International Workshop on

Augmented Reality (IEEE Computer Society, Washington, DC, USA).

[4] A.Gyaourova, C.Kamath,S.and C.Cheung-Block Matching object tracking- LLNL Technical report, October 2003.

[5] Y.Rosenberg and M.Weman-Real Time Object Tracking from a Moving Vid- eo Camera: A software approach on PC-Applications of Computer Vision ,,

1998.WACV ’98.Proceedings.

[6] A.Turolla,L.Marchesotti and C.S.Regazzoni- Multicamera Object tracking in video surveillance applications.

Y.Wang,J.Doherty and R.Van Dyck-Moving object tracking in video-Proc. Conference on Information Sciences and Systems,Princeton,NJ,March2000.

[7] Ci gdem Ero glu Erdem and Bulent San-Video Object Tracking With Feed- back of Performance Measures-IEEE Transactions on circuits and systems for video technology,vol.13,no.4,April 2003.

[8] Alok K.Watve and Shamik Sural – A Seminar on Object tracking in Video

Scenes.

[9] P.Subashini,M.Krishnaveni,Vijay Singh-Implementation of Object Tracking System Using Region Filtering Algorithm based on Simulink Blocksets,International Journal of Engineering Science and Technolo- gy(IJEST),Vol.3 No.8 August 2011. PP-6744-6750.ISSN:0975-5462.

[10] Ashwani Aggarwal,Susmit Biswas,sandeep Singh,Shamik Sural and A.K.Majundar,-Object tracking using background subtraction and motion es- timation in MPEG videos,Springer-Verlag Berlin Heidelberg,ACCV

2006,LNCS 3852,pp:121-130.

[11] Zhuohua Duan,Zixing Cain ad Jinxia Yu,”Occlusion detection and recovery in video object tracking based on adaptive particle filters,”IEEE trans.China,PP.466-469[Chinese Control and Decision Conference CCDC2009].

[12] XI Tao,Zhang shengxiu and Yan shiyuan,”A robust visual tracking approach with adaptive particle filtering,”IEEE Second Intl.Conf. on Communication Software and Networks 2010,pp:549-553.

[13] Xiaoqin Zhang,Weiming Hu,Zixiang zhao,Yan-guo Wang,Xi Li and Qingdi

Wei,”SVD based kalman particle filter for robust visual tracking,” IEEE

2008,978-1-4244-2175-6.

[14] Li Ying-hong,Pang Yi-gui,Li Zheng-xi and Liu Ya-li,”An intelligent tracking technology based on kalman and mean shift algorithm,” IEEE Second Intl.Conf. on Computer Modeling and Simulation 2010.

[15] Madhur Mehta,Chandni Goyal,M.C.Srivastava and R.C.Jain,”Real time object detection and tracking:Histogram matching and kalman filter approach,” IEEE 2010,978-1-4244-5586-7.

[16] A.Purushothaman,K.R.Shankar kumar,R.Rangarajan,A.Kandasawamy- “Compressed Novel Way of Tracking Moving Objects in Image and Video Scenes,”European Journal of Scientific Research ,Vol.64 No.3(2011),pp.353-

360.ISSN 1450-216X.

[17] G.Suresh, P.Epsiba, Dr.M.Rajaram, Dr.S.N.Sivanandam,” Image And Video Coding With A New Wash Tree Algorithm For Multimedia Services”, Jour- nal of Theoretical and Applied Information Technology, 2005 – 2009, PP: 53-

59.

[18] T. Senthil Kumar, S. N. Sivanandam,” A Modified Approach for Detecting

Car in video using Feature

[19] Extraction Techniques”, European Journal of Scientific Research, ISSN 1450-

216X Vol.77 No.1 (2012), pp.134-144.

[20] Daniel Marcus jang,Matthew Turk,”CarRec: A Real Time Car Recognition System”, WACV '11 Proceedings of the 2011 IEEE Workshop on Applications of Computer Vision.

[21] Luo Juan, Oubong Gwun “A Comparison of SIFT, PCA-SIFT and SURF”,

International Journal of Image Processing (IJIP), september 10,2008.

[22] T.Senthil kumar, Dr.S.N.Sivanandam, Adheen Ajay, and P. Krishnakuma,” An improved approach for Character Recognition in Vehicle Number plate using Eigenfeature Regularisation and Extraction Method”, International Journal of Research and Reviews in Electrical and Computer Engineering (IJRRECE) Vol. 2, No. 2, June 2012, ISSN: 2046-5149.

[23] X. Q. Ding, "Machine printed Chinese character recognition," in Handbook of Character Recognition and Document Image Analysis, H. Bunke and P.S.P. Wang, Beijing: World Scientific Publishing Company, 1997, pp. 305-329

[24] Spector, A. Z. 1989. Achieving application requirements. In Distributed Sys- tems, S. Mullender
